Mitsubishi Electric Corporation has developed a teaching-less robot system technology that enables robots to perform tasks such as sorting and arrangement as fast as humans, without having to be taught by specialists. The system incorporates Mitsubishi Electric’s Maisart®1 AI technologies, including high-precision speech recognition, which allows operators to issue voice instructions to initiate work tasks and then fine-tune robot movements as required. The technology is expected to be applied in facilities such as food-processing factories, where frequent changes in the items handled have until now made it difficult to introduce robots. Mitsubishi Electric aims to commercialize the technology in or after 2023 following further performance enhancements and extensive verification.

Key Features

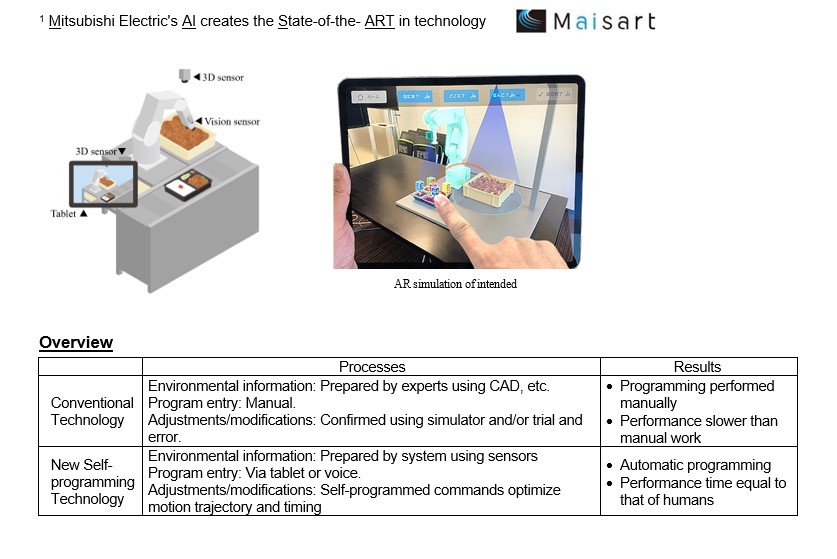

1) Robot movements self-programmed/adjusted based on simple commands from operator

- Robot movements are self-programmed and self-adjusted in response to simple commands communicated by voice or via a device menu by a non-specialist operator.

- Proprietary voice-recognition AI accurately recognizes voice instructions even in noisy environments, a first among industrial robot manufacturers.2

- Sensors capture 3D information (images and distances) about the work area, which is processed with augmented reality (AR) technology for simulations that allow the operator to visualize expected results.

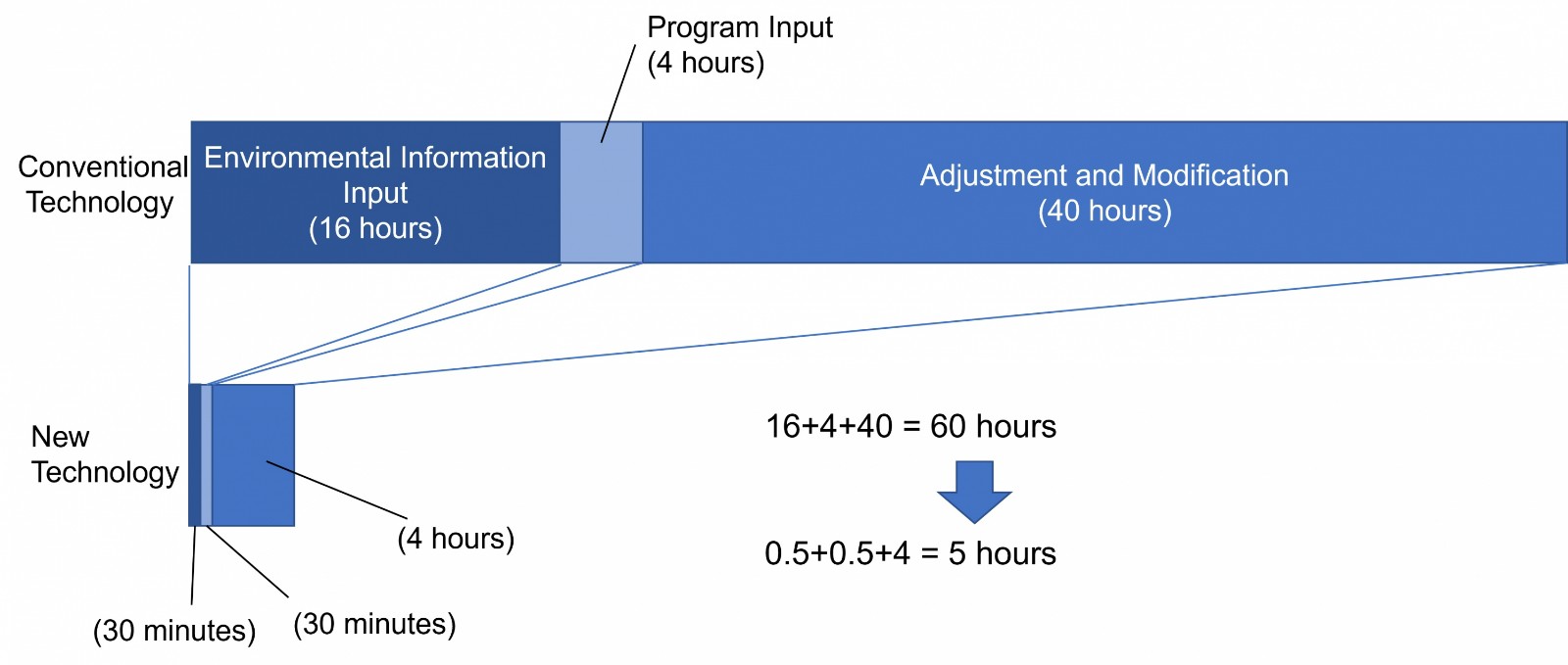

- Programming and adjustments take one-tenth or less of the time required by conventional systems.3

2 Mitsubishi Electric survey of instruction systems deployed by industrial robot manufacturers (as of February 28, 2022)

3 In-house comparison

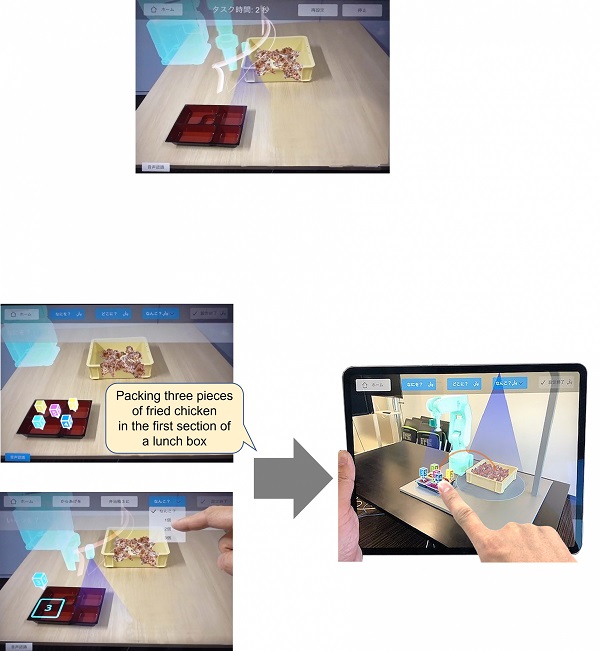

The system, responding to voice or menu instructions, scans the work surroundings with a three-dimensional sensor and then automatically programs the robot’s movements. Movements can be easily fine-tuned via further commands from the operator. Mitsubishi Electric’s unique voice-recognition AI, highly accurate even in noisy factories, offers the first voice-instruction user interface deployed by an industrial robot manufacturer. For example, in a food-processing factory, a non-specialist could instruct a robot simply by saying something like “Pack three pieces of chicken in the first section of the lunch box.” If a voice instruction is ambiguous, the AI can infer its implied meaning, such as determining how much motion compensation is required when instructed “A little more to the right.” Alternatively, the operator can use a tablet with menus to issue instructions, selecting categories such as “where,” “what” and “how many” to generate simple programs.
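To make the menu route concrete, here is a minimal sketch of how a “where / what / how many” selection might expand into a simple pick-and-place program, together with a relative nudge for an instruction like “A little more to the right.” The data layout, the `PackInstruction`, `generate_program` and `nudge` names, the axis convention and the default 5 mm step are all hypothetical; the source does not describe the actual program format or how the AI sizes such corrections.

```python
from dataclasses import dataclass

# Hypothetical step applied when a relative instruction such as
# "a little more to the right" gives no explicit distance.
DEFAULT_NUDGE_MM = 5.0

# Assumed axis convention: +x is "right", +y is "forward".
DIRECTIONS = {"right": (1, 0), "left": (-1, 0), "forward": (0, 1), "back": (0, -1)}


@dataclass
class PackInstruction:
    what: str      # item category, e.g. "chicken"
    where: int     # lunch-box section number (1-based)
    how_many: int  # number of pieces to place


def generate_program(instr: PackInstruction) -> list[str]:
    """Expand one menu selection into a flat list of pick/place steps."""
    steps = []
    for _ in range(instr.how_many):
        steps.append(f"pick {instr.what}")
        steps.append(f"place section {instr.where}")
    return steps


def nudge(position, direction, amount_mm=DEFAULT_NUDGE_MM):
    """Apply a relative correction to an (x, y) position in millimeters."""
    dx, dy = DIRECTIONS[direction]
    x, y = position
    return (x + dx * amount_mm, y + dy * amount_mm)
```

For example, `generate_program(PackInstruction("chicken", 1, 3))` yields six alternating pick/place steps, and `nudge((100.0, 50.0), "right")` moves the target to `(105.0, 50.0)`.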

The tablet also can be used to view stereographic AR simulations that allow the operator to confirm that instructions will have the intended results. For added convenience, the system also can recommend the ideal positioning of a robot in an AR virtual space without requiring a dedicated marker, another industry first.4
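One simple way to think about recommending a robot position is as a search over candidate base locations for the one that can reach the most work points. The sketch below assumes a planar work area and a fixed circular reach radius, a large simplification of real arm kinematics; `recommend_base` and its parameters are hypothetical and do not describe Mitsubishi Electric’s method.

```python
import math


def recommend_base(targets, candidates, reach):
    """Return the candidate base position that can reach the most targets.

    targets and candidates are (x, y) points on the work surface; reach is
    the arm's assumed maximum horizontal reach. Ties go to the candidate
    whose farthest reachable target is closest, keeping the arm away from
    the edge of its workspace.
    """
    def score(base):
        dists = [math.dist(base, t) for t in targets]
        reachable = [d for d in dists if d <= reach]
        worst = max(reachable) if reachable else math.inf
        return (len(reachable), -worst)

    return max(candidates, key=score)
```

With targets at x = 0, 1 and 5 and a reach of 3, a base at x = 3 covers all three points, so it is preferred over bases at x = 0 or x = 10.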

By enabling the self-programming of robot movements, including obstacle avoidance, the system reduces the workload associated with gathering environmental information, inputting data and confirming operations using simulators and/or actual equipment. As a result, the system can complete these cumulative processes in one-tenth or less of the time required by conventional methods. In view of such advantages, the system is expected to support the automation of work sites that are not readily suited to robots, such as food-processing factories where items change frequently and robot programs must be updated for each change.

4 Mitsubishi Electric survey of robot models incorporating AR virtual spaces (as of February 28, 2022)

Fig. 2 Voice entry and touch-screen entry methods (renditions)

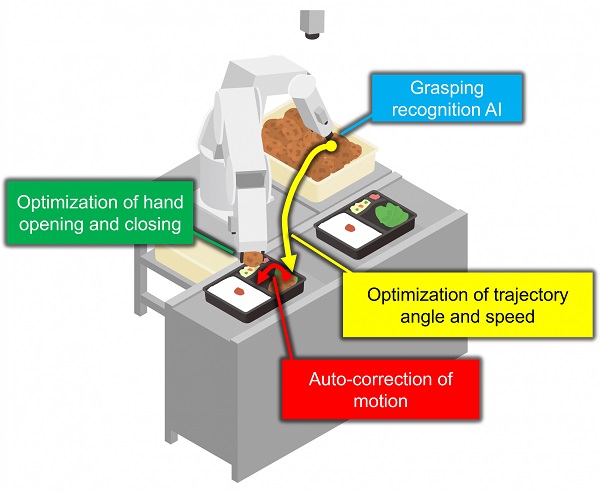

2) Technology-optimized movements enable robot to perform tasks as fast as humans

- The self-programming system generates commands that control robot movements, including obstacle avoidance, so that work tasks are performed as fast as humans (a minimum of 2 seconds to grasp an item5).

- The system also adjusts and optimizes the timing of opening and closing the robotic hand to reduce wasted time.

- Using visual information from a camera attached to the robot’s hand, the system automatically corrects movements, including when the position of the robot or the object to be placed changes.

5 Time required to grasp and place a part in a designated place

Conventionally, increasing a robot’s operational speed takes considerable time to achieve the desired trajectories, because the operator must use a simulator and/or the actual robot to determine the best conditions. To address this, Mitsubishi Electric has developed a trajectory-generation technology that optimizes robot movements using information about surrounding obstacles and robot performance. The company has also developed an acceleration/deceleration-optimization technology that automatically generates a velocity pattern to achieve the shortest possible arm-travel time within the permissible range of force that can be exerted by the robot. Both technologies help to shorten the time required to adjust the robot’s movements.
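The idea of a shortest-time velocity pattern under a force limit can be illustrated with the textbook minimum-time trapezoidal profile. This sketch is not Mitsubishi Electric’s algorithm: it assumes a single axis modeled as a point mass, so the force limit becomes a constant acceleration cap a = F/m, plus a speed cap `v_max`. The function names and parameters are hypothetical.

```python
import math


def min_travel_time(distance, v_max, force_max, mass):
    """Shortest time to move `distance` and stop, for a point mass whose
    acceleration is capped at force_max / mass and speed at v_max.

    Returns (total_time, peak_velocity).
    """
    a_max = force_max / mass
    # Distance consumed by accelerating to v_max and braking back to rest.
    d_ramps = v_max ** 2 / a_max
    if distance >= d_ramps:
        # Trapezoidal profile: accelerate, cruise at v_max, decelerate.
        return distance / v_max + v_max / a_max, v_max
    # Triangular profile: the move is too short to reach v_max.
    v_peak = math.sqrt(distance * a_max)
    return 2 * v_peak / a_max, v_peak


def velocity_at(t, distance, v_max, force_max, mass):
    """Commanded speed at time t along the minimum-time profile."""
    total, v_peak = min_travel_time(distance, v_max, force_max, mass)
    a_max = force_max / mass
    t_ramp = v_peak / a_max
    if t <= 0 or t >= total:
        return 0.0
    if t < t_ramp:
        return a_max * t              # full-force acceleration
    if t > total - t_ramp:
        return a_max * (total - t)    # full-force braking
    return v_peak                     # cruise (trapezoidal case only)
```

For a 1 m move with `v_max` = 1 m/s and a = 20 N / 10 kg = 2 m/s², the profile is trapezoidal and takes 1.5 s. A real arm’s achievable acceleration varies with posture and payload, so a production planner would need a much richer dynamic model than this.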

Optimizing the timing of hand opening/closing also helps to reduce work time. Conventionally, such adjustments are performed manually using simulations and by operating the robot. The new technology, however, automatically adjusts and optimizes this timing according to the characteristics of the hand and the object to be grasped, thereby eliminating lengthy manual adjustments while also improving work efficiency.
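As a rough illustration of overlapping hand closing with arm travel, the sketch below assumes a parallel gripper whose two fingers move symmetrically at a constant speed. The closing command is issued early by exactly the time the fingers need to cover the gap, so finger travel is hidden inside the arm motion. `close_start_time` and its model are hypothetical, not the company’s method.

```python
def close_start_time(arrival_time, open_width, object_width, finger_speed):
    """Time at which to command the hand to close so the fingers reach the
    object just as the arm arrives at the grasp pose.

    Assumes a parallel gripper whose two fingers each travel half of the
    gap (open_width - object_width) at a constant finger_speed.
    """
    if object_width > open_width:
        raise ValueError("object is wider than the open hand")
    time_to_contact = (open_width - object_width) / 2 / finger_speed
    # Never schedule the command before the motion starts (t = 0).
    return max(0.0, arrival_time - time_to_contact)
```

For an 80 mm opening, a 40 mm item and fingers moving at 100 mm/s, closing can start about 0.2 s before the arm arrives. A practical scheduler would likely add a small safety margin so the fingers never strike the item while the arm is still moving.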

In addition, the grasping-recognition AI and a three-dimensional sensor fixed above the system quickly determine the best position for grasping, thereby reducing wasted time. Furthermore, visual information from a camera attached to the robot hand allows the robot to self-correct its movement if the position of the robot or the target object shifts.
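A minimal sketch of the two steps in this paragraph, assuming grasp candidates arrive with confidence scores from some recognition model and the hand camera reports the object’s planar offset from where it was expected. Everything here (the `Grasp` type, the helper names, millimeter units and the 20 mm rejection threshold) is a hypothetical illustration rather than the actual system.

```python
import math
from dataclasses import dataclass


@dataclass
class Grasp:
    x: float        # grasp point in the work-area frame (assumed mm)
    y: float
    score: float    # confidence from a hypothetical grasp-recognition model


def best_grasp(candidates):
    """Pick the highest-confidence grasp from the overhead-sensor candidates."""
    if not candidates:
        raise ValueError("no grasp candidates")
    return max(candidates, key=lambda g: g.score)


def corrected_target(planned, observed_offset, max_offset=20.0):
    """Shift a planned (x, y) target by the offset seen by the hand camera.

    Offsets larger than max_offset are treated as an unreliable observation;
    None is returned so the caller can re-scan instead of moving blindly.
    """
    dx, dy = observed_offset
    if math.hypot(dx, dy) > max_offset:
        return None
    return (planned[0] + dx, planned[1] + dy)
```

If the camera sees the item 3 mm further along x and 4 mm back along y, a planned target of (100, 50) becomes (103, 46); a 30 mm jump would instead trigger a re-scan.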

By optimizing robotic arm and hand movements, Mitsubishi Electric’s new technology enables robots to work as fast as humans, i.e., a minimum of 2 seconds to grasp an item and place it in a designated place.

Fig. 3 Robot work time equivalent to that of manual labor

Other Features

Integrated system management of peripheral information

To simplify the process of adding custom functions, the system supports the Robot Operating System (ROS) software platform. Also, Mitsubishi Electric has developed a ROS-Edgecross6 linkage function to centrally manage information on Edgecross, ROS and the entire system. Edgecross connectivity and multi-vendor capability simplify the monitoring and analysis of entire production lines for enhanced productivity and quality.

6 Japan-based open software platform for edge computing combining FA and IT